Lookup Tables

Lookup tables allow you to look up, map, and translate message field values into new values and write them into new message fields or overwrite existing fields. As a simple example, you could use a static CSV file to map IP addresses to hostnames.

Components

Lookup tables consist of four components:

- Data adapters

- Caches

- Lookup tables

- Lookup results

Data Adapters

Data adapters are used to do the actual lookup for a value from a connected external source. They might read from a CSV file, connect to a database, or execute requests to receive the lookup result. When you create a data adapter, you select the type and enter connection information so Graylog can retrieve requested information.

Data adapter implementations are pluggable and new ones can be added through plugins.

On the Data Adapters tab, a table view shows all data adapters you have created. Select a data adapter title to open the sidebar view, which shows details for that data adapter. For some data adapter types, you can take actions from the sidebar, such as performing a test lookup.

Caches

Caches are responsible for caching the lookup results to improve lookup performance as well as to avoid overloading databases and APIs. Caches are separate entities so that you can reuse a cache implementation for different data adapters. Therefore, data adapters do not have to manage and implement their own caching.

Cache implementations are pluggable and new ones can be added through plugins.

On the Caches tab, a table view shows all caches you have created. Select a cache title to open the sidebar view, which shows details for that cache.

Lookup Tables

The lookup table component ties a data adapter instance and a cache instance together. Use lookup tables to convert values in converters, pipeline functions, and decorators.
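As a rough illustration of how these pieces relate, the sketch below models a lookup table as a thin object that checks a cache first and falls back to a data adapter on a miss. The class names (`CsvAdapter`, `DictCache`, `LookupTable`) and the sample data are hypothetical; this is not Graylog's internal API.

```python
from typing import Optional


class CsvAdapter:
    """Hypothetical data adapter backed by an in-memory key/value mapping."""

    def __init__(self, rows: dict[str, str]) -> None:
        self.rows = rows
        self.calls = 0  # counts how often the "external source" is queried

    def get(self, key: str) -> Optional[str]:
        self.calls += 1
        return self.rows.get(key)


class DictCache:
    """Hypothetical cache: a plain dictionary with no expiry."""

    def __init__(self) -> None:
        self.entries: dict[str, Optional[str]] = {}


class LookupTable:
    """Ties one data adapter instance and one cache instance together."""

    def __init__(self, adapter: CsvAdapter, cache: DictCache) -> None:
        self.adapter = adapter
        self.cache = cache

    def lookup(self, key: str) -> Optional[str]:
        if key in self.cache.entries:      # cache hit: skip the adapter
            return self.cache.entries[key]
        value = self.adapter.get(key)      # cache miss: ask the adapter
        self.cache.entries[key] = value
        return value


adapter = CsvAdapter({"10.0.0.5": "db01.example.org"})
table = LookupTable(adapter, DictCache())
first = table.lookup("10.0.0.5")
second = table.lookup("10.0.0.5")
print(first, second, adapter.calls)
```

Because the cache is a separate object, the same cache class could be paired with any adapter that exposes a `get` method, which mirrors the reuse described above.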

On the Lookup Tables tab, a table view shows all lookup tables you have created. Select a lookup table title to open the sidebar view, which shows details for that lookup table. You can take actions from the sidebar, such as purging the cache (either the entire cache or individual entries by key).

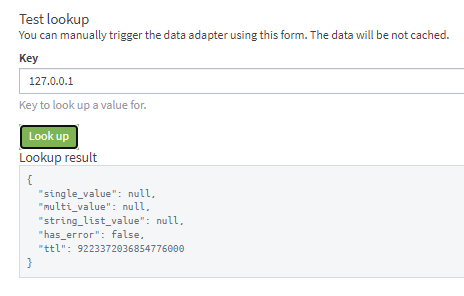

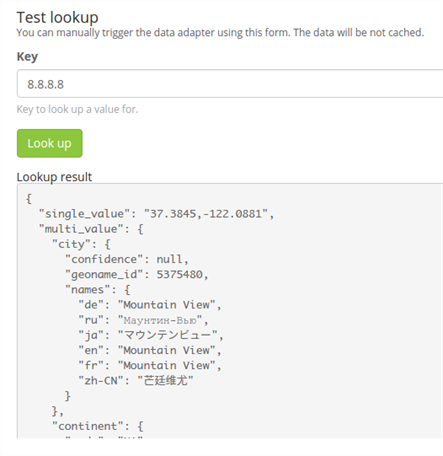

For some lookup tables, you can enter a key to preview its value as a test. The returned value displays the JSON structure of the returned data. This feature is available only for CSV File and MongoDB data adapters.

Lookup Results

The lookup result is returned by a lookup table through the data adapter and can contain two types of data: a single value and a multi value.

The single value can be a string, number, or boolean. You can use a single value in converters, decorators, and pipeline rules. For example, if you have a CSV file to map IP addresses to hostnames, the single value result would be the hostname string.

A multi value is a map or dictionary-like data structure that can contain multiple values. This type is useful if the data adapter can provide multiple values for a key. A good example of this is the geo-ip data adapter, which provides the latitude and longitude for an IP address as well as information about the city and country of the location. You can use the multi value only in a pipeline rule with the lookup() pipeline function.
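To make the distinction concrete, here is a minimal sketch of a lookup result that carries both a single value and a multi value. The `LookupResult` class, its field names, and all sample data are assumptions made for illustration and may not match the exact structure Graylog returns.

```python
from dataclasses import dataclass
from typing import Optional, Union

# A single value is a string, number, or boolean.
SingleValue = Union[str, int, float, bool]


@dataclass
class LookupResult:
    """Hypothetical container for the two kinds of result data."""
    single_value: Optional[SingleValue]
    multi_value: Optional[dict]


# CSV-style result: the hostname mapped to an IP address.
csv_result = LookupResult(
    single_value="db01.example.org",
    multi_value={"value": "db01.example.org"},  # illustrative shape only
)

# Geo-IP-style result: several attributes for one key (values are made up).
geo_result = LookupResult(
    single_value="53.55,9.99",
    multi_value={
        "city": "Hamburg",
        "country": "DE",
        "latitude": 53.55,
        "longitude": 9.99,
    },
)

print(csv_result.single_value)
print(geo_result.multi_value["country"])
```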

Example 1: Output for a CSV data adapter including a single value and a multi value.

Example 2: Output for the geo-ip data adapter including a single value and a multi value.

Set Up a Lookup Table

To configure lookup tables, go to System > Lookup Tables.

You need at least one data adapter and one cache to create a lookup table, and you can create these components during the lookup table creation workflow described here. Data adapters and caches can also be created separately on their respective tabs.

Create a Lookup Table

To create a lookup table from the Lookup Tables tab:

- Click Create lookup table.

- Fill in the required fields and optional fields as necessary:

Title

Enter a short title for this lookup table.

Description (optional)

Enter a description of the lookup table.

Name

Enter the name used to refer to this lookup table. This value must be unique.

Enable single default value

Select this checkbox if the lookup table should provide a default value for the single value. Then, select the value type from the dropdown and enter the value.

Enable multi default value

Select this checkbox if the lookup table should provide a default value for the multi value. Then, select the value type from the dropdown and enter the value.

- Select the data adapter to use for this lookup table. If you have not created a data adapter or you want to use a new data adapter for this lookup table, click Create Data Adapter. See the Create a Data Adapter section below for details.

- Select the cache instance to use for this lookup table. If you have not created a cache or you want to use a new cache for this lookup table, click Create Cache. See the Create Cache section below for details.

- Click Create lookup table.

The new lookup table is added to the list on the Lookup Tables tab.

Create a Data Adapter

You can start this process from within the Create a Lookup Table workflow described above or separately from the Data Adapters tab.

- Click Create Data Adapter.

- Select a data adapter type.

Each data adapter type includes specific fields that you need to configure. Check the specific data adapter documentation for details.

- Click Create adapter.

Set TTL Option for Data Adapters

Lookup tables accumulate entries such as policy violations or threat intelligence feeds that can become irrelevant over time. In such cases, best practice is to choose the time to live (TTL) option on the data adapter configuration page to automatically delete old entries.

Each data adapter type has the Custom Error TTL setting. The default value is 5 seconds. Enable the checkbox to set a custom value. If you create multiple adapters of the same type, each one can have a different TTL value.

A sample use case for the TTL option is Indicators of Compromise (IoC) lists. These lists consist of forensic evidence of security threats, such as dubious logins or unusual traffic entering or leaving a network. IoCs appear and disappear over time, and indicators that were once critical can lose importance. Because IoC lists are constantly updated, a TTL keeps stale indicators from lingering in the lookup table.

Another scenario might be an account lockout situation. Providing a list of users who have updated their passwords in the past 72 hours would be beneficial in this case. If these users get locked out of their account, the presence of their username on a lookup table would enable analysts to treat these lockouts differently from users who have not changed their passwords recently.
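The 72-hour password-change scenario can be sketched as a simple age check. The function below is an illustration under stated assumptions: it assumes each entry records when it was added, and the `purge_expired` name, the usernames, and the timestamps are all hypothetical rather than part of Graylog.

```python
from datetime import datetime, timedelta


def purge_expired(
    entries: dict[str, datetime], now: datetime, ttl: timedelta
) -> dict[str, datetime]:
    """Keep only the entries whose age does not exceed the TTL."""
    return {key: added for key, added in entries.items() if now - added <= ttl}


now = datetime(2024, 1, 10, 12, 0)
password_changes = {
    "alice": now - timedelta(hours=5),    # changed recently, stays listed
    "bob": now - timedelta(hours=100),    # older than the TTL, dropped
}

recent = purge_expired(password_changes, now, ttl=timedelta(hours=72))
print(sorted(recent))
```

With a 72-hour TTL, only users who changed their password inside that window remain available for a lockout rule to check.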

Create Cache

You can start this process from within the Create a Lookup Table workflow described above or separately from the Caches tab.

- Click Create Cache.

- Select a cache type:

  - Do not cache values: Allows you to define a cache that does not cache values.

  - Node-local, in-memory cache: Allows you to define a cache that is local to each Graylog server. For example, if you have three servers, each server maintains an independent cache. They do not share entries.

- Enter the required information to configure the cache. Note that only Title, Description, and Name are used for the "no cache" type, while all other fields apply only to the in-memory cache type.

Title

Enter a short title for this cache.

Description (optional)

Add information to help identify the purpose of this cache.

Name

Enter a name by which to refer to this cache. Must be unique. Note that this value is created automatically from the Title. You can change the value if you need to set it to something different.

Maximum entries

Set a value for the maximum number of entries the cache keeps in memory.

Expire after access

Use this setting to remove entries from the cache if they haven't been accessed for the specified amount of time. This option is selected by default with a time value of 60 seconds.

Expire after write

Use this setting to remove entries from the cache once the specified interval has passed since they were first added. This option is not selected by default. Enter the time value if you choose to enable the feature.

Ignore empty results

Enable this option if you want null values in results to be ignored and not cached.

TTL for empty results

Enter the time to live (TTL) value for empty results. Empty results are removed from the cache after the specified time.

- Click Create cache.
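The interaction between Maximum entries, Expire after access, and Expire after write can be easier to see in code. The following is a simplified sketch, not Graylog's cache implementation: the `InMemoryCache` class is hypothetical, it evicts the least recently used entry when full (an assumption about eviction order), and it leaves out the empty-result settings.

```python
import time
from collections import OrderedDict
from typing import Callable, Optional


class InMemoryCache:
    """Hypothetical node-local cache with size and time-based eviction."""

    def __init__(
        self,
        max_entries: int,
        expire_after_access: Optional[float] = None,
        expire_after_write: Optional[float] = None,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.max_entries = max_entries
        self.expire_after_access = expire_after_access
        self.expire_after_write = expire_after_write
        self.clock = clock
        # key -> (value, written_at, last_accessed_at), least recent first
        self._data: OrderedDict = OrderedDict()

    def put(self, key: str, value: str) -> None:
        now = self.clock()
        self._data[key] = (value, now, now)
        self._data.move_to_end(key)
        while len(self._data) > self.max_entries:
            self._data.popitem(last=False)  # drop the least recently used entry

    def get(self, key: str) -> Optional[str]:
        entry = self._data.get(key)
        if entry is None:
            return None
        value, written_at, accessed_at = entry
        now = self.clock()
        too_old = (self.expire_after_write is not None
                   and now - written_at >= self.expire_after_write)
        idle = (self.expire_after_access is not None
                and now - accessed_at >= self.expire_after_access)
        if too_old or idle:
            del self._data[key]
            return None
        self._data[key] = (value, written_at, now)  # refresh the access time
        self._data.move_to_end(key)
        return value


t = [0.0]  # manual clock so the example is deterministic
cache = InMemoryCache(max_entries=2, expire_after_access=60, clock=lambda: t[0])
cache.put("10.0.0.5", "db01")
t[0] = 30
hit = cache.get("10.0.0.5")        # read at t=30 restarts the access timer
t[0] = 80
still_hit = cache.get("10.0.0.5")  # 50 seconds since the last access
t[0] = 150
miss = cache.get("10.0.0.5")       # 70 seconds since the last access
print(hit, still_hit, miss)
```

Each read restarts the access timer, so a frequently used key can stay cached indefinitely unless Expire after write is also set.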

Implement Lookup Tables

Lookup tables can be used with the following Graylog components.

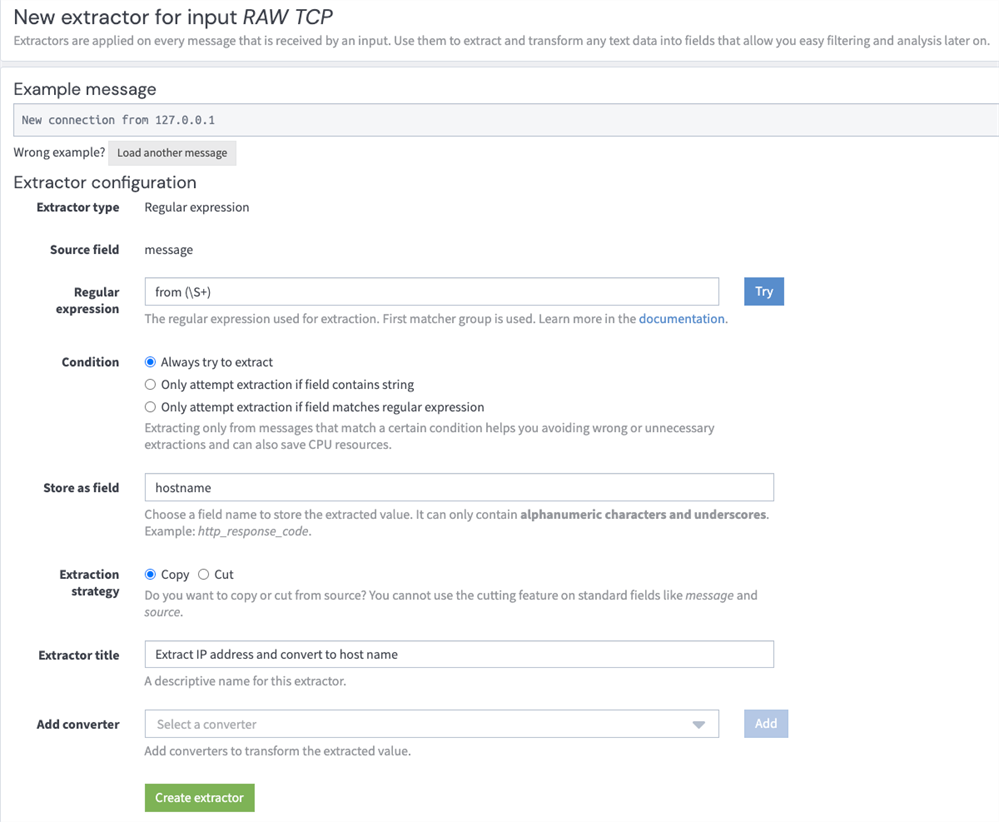

Converters

When you use an extractor to get values out of a text message, you can use a lookup table converter to do a lookup on the extracted value.

Decorators

A lookup table decorator can be used to enrich messages by looking up values at search time.

Pipeline Rules

There are two lookup functions you can use in a pipeline rule: lookup() and lookup_value(). The first returns the multi value data of the lookup result, and the second returns the single value.
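The difference between the two functions can be modeled in a few lines of Python. These are stand-ins that only mimic the return behavior described above; they are not the pipeline functions themselves, and the `HOSTS` table, its contents, and the `default` parameter handling are assumptions for illustration.

```python
from typing import Any, Optional

# Hypothetical table contents: key -> (single value, multi value).
HOSTS = {
    "10.0.0.5": ("db01.example.org", {"hostname": "db01.example.org", "rack": "r12"}),
}


def lookup_value(table: dict, key: str, default: Any = None) -> Any:
    """Return the single value for the key, or the default if it is unknown."""
    result = table.get(key)
    return default if result is None else result[0]


def lookup(table: dict, key: str, default: Optional[dict] = None) -> dict:
    """Return the multi value for the key, or the default (empty if omitted)."""
    result = table.get(key)
    return (default or {}) if result is None else result[1]


single = lookup_value(HOSTS, "10.0.0.5")
multi = lookup(HOSTS, "10.0.0.5")
fallback = lookup_value(HOSTS, "192.0.2.1", default="unknown")
print(single, multi["rack"], fallback)
```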

curl -u d2tirtpunshmgdsbq5k3j0g4ku230ggruhsqpa0iu7mj1lia55i:token \
    -H 'X-Requested-By: cli' -H 'Accept: application/json' \
    -X POST 'http://127.0.0.1:9000/api/plugins/org.graylog.plugins.lookup/lookup/adapters/mongodb/mongodb-data-name' \
    -H 'Content-Type: application/json' \
    --data-binary $'{\n"key": "myIP",\n"values": ["12.34.42.99"],\n"data_adapter_id":"5e578606cdda4779dd9f2611"\n}'
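For scripted use, the same request could be assembled with Python's standard library. The sketch below only mirrors the URL, headers, and JSON body of the curl command above and builds the request without sending it; the `build_request` helper and the `<api-token>` placeholder are hypothetical, and the endpoint's behavior is not verified here.

```python
import base64
import json
import urllib.request

# URL copied from the curl command; adjust host and adapter name as needed.
API_URL = (
    "http://127.0.0.1:9000/api/plugins/org.graylog.plugins.lookup"
    "/lookup/adapters/mongodb/mongodb-data-name"
)
TOKEN = "<api-token>"  # placeholder, replace with a real access token


def build_request(token: str) -> urllib.request.Request:
    """Build (but do not send) the POST request shown in the curl example."""
    # curl's "-u <token>:token" becomes an HTTP Basic Authorization header.
    credentials = base64.b64encode(f"{token}:token".encode()).decode()
    body = {
        "key": "myIP",
        "values": ["12.34.42.99"],
        "data_adapter_id": "5e578606cdda4779dd9f2611",
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Basic {credentials}",
            "X-Requested-By": "cli",
            "Accept": "application/json",
            "Content-Type": "application/json",
        },
    )


request = build_request(TOKEN)
print(request.get_method(), request.full_url)
# To send it: urllib.request.urlopen(request)
```

Building the body with `json.dumps` avoids the hand-quoting mistakes that are easy to make in an escaped shell string.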