We strongly recommend that you use a dedicated Elasticsearch cluster for your Graylog setup.

If you are using a shared Elasticsearch setup, a problem with indices unrelated to Graylog might turn the cluster status to YELLOW or RED and impact the availability and performance of your Graylog setup.

Elasticsearch Versions

Starting with version 2.3, Graylog uses the HTTP protocol to connect to your Elasticsearch cluster, so it does not have a hard requirement for the Elasticsearch version anymore. We can safely assume that any version from 2.x onwards works.

| Graylog Version | Elasticsearch Version |

|---|---|

| 1.2.0-1.2.1 | 1.7.1 |

| 1.3.0-1.3.3 | 1.7.3 |

| 1.3.4 | 1.7.5 |

| 2.0.0 | 2.3.1 |

| 2.0.1-2.0.3 | 2.3.2 |

| 2.1.0-2.1.3 | 2.3.5 |

| 2.2.0-2.2.3 | 2.4.4 |

| 2.3.x-2.4.x | 2.4.x, 5.6.x |

|

2.5.x |

2.4.x, 5.6.x, 6.8.x |

|

3.0-3.3 |

5.6.x, 6.8.x |

|

4.x |

6.8.x, 7.7.x-7.10.x |

Configuration

Graylog

The most important setting to make a successful connection is a list of comma-separated URIs to one or more Elasticsearch nodes. Graylog needs to know the address of at least one other Elasticsearch node given in the elasticsearch_hosts setting. The specified value should at least contain the scheme (http:// for unencrypted, https:// for encrypted connections), the hostname or IP and the port of the HTTP listener of this node (which is 9200 unless otherwise configured). Optionally, you can also specify an authentication section containing a user name and a password, if either of your Elasticsearch nodes use Shield/X-Pack or Search Guard, or you have an intermediate HTTP proxy requiring authentication between the Graylog server and the Elasticsearch node. Additionally you can specify an optional path prefix at the end of the URI.

A sample specification of elasticsearch_hosts:

elasticsearch_hosts = http://es-node-1.example.org:9200/foo,https://someuser:somepassword@es-node-2.example.org:19200Graylog does not currently react to externally triggered index changes (creating/closing/reopening/deleting an index). These actions need to be performed through the Graylog REST API in order to retain index consistency.

Available Elasticsearch Configuration Tunables

The following configuration options are now being used to configure connectivity to Elasticsearch:

| Config Setting | Type | Comments | Default |

|---|---|---|---|

elasticsearch_connect_timeout

|

Duration | Timeout when connection to individual Elasticsearch hosts | 10s (10 Seconds) |

elasticsearch_hosts

|

List<URI> | Comma-separated list of URIs of Elasticsearch hosts | http://127.0.0.1:9200

|

elasticsearch_idle_timeout

|

Duration | Timeout after which idle connections are terminated | -1s (Never) |

elasticsearch_max_total_connections

|

int | Maximum number of total Elasticsearch connections | 20

|

elasticsearch_max_total_connections_per_route

|

int | Maximum number of Elasticsearch connections per route/host | 2

|

elasticsearch_socket_timeout

|

Duration | Timeout when sending/receiving from Elasticsearch connection | 60s (60 Seconds) |

elasticsearch_discovery_enabled

|

boolean | Enable automatic Elasticsearch node discovery | false

|

elasticsearch_discovery_default_user

|

String | The default username used for authentication for all newly discovered nodes. | empty (no authentication used for discovered nodes) |

|

|

String |

The default password used for authentication for all newly discovered nodes. |

empty (no authenticationused for discovered nodes) |

|

|

String |

The default scheme used for all newly discovered nodes. |

|

|

|

String |

Filter by node attributes for the discovered nodes |

empty (use all nodes) |

|

|

Duration |

Frequency of the Elasticsearch node discovery |

|

|

|

boolean |

Enable GZIP compression of Elasticseach request payloads |

|

|

|

String |

Major version of the Elasticsearch version used. If not specified, the version will be auto-sensed with the configured nodes. Will disable auto-sensing if specified. |

Values: |

|

|

boolean |

Enable muting of deprecation warnings for deprecated configuration settings in Elasticsearch. These warnings are attached as “Warnings” in HTTP-Response headers and might clutter up the logs. Works only with ES7. |

false |

|

|

int |

Maximum number of retries to connect to Elasticsearch on boot for the version probe before finally giving up. Use 0 to try until a connection can be made. |

|

|

|

Duration |

Waiting time in between connection attempts for elasticsearch_version_probe_attempts |

|

Automatic Version Sensing

We support multiple major versions of Elasticsearch (starting with Graylog 4.0) which are partially incompatible with each other (ES6 & ES7). Therefore, we need to know which Elasticsearch version is running in the cluster. This is why we make a single request to the first reachable Elasticsearch node and parse the version of the response it sends back. There are a few things which could go wrong at this point. You might want to run an unsupported version. If you feel comfortable doing so, you can set the elasticsearch_version configuration variable. It will disable auto-sensing and force Graylog to pretend that this Elasticsearch major version is running in the cluster. It will load the corresponding support module.

Automatic Node Discovery

Graylog uses automatic node discovery to gather a list of all available Elasticsearch nodes in the cluster at runtime and distributes requests among them to potentially increase their performance and availability. To enable this feature, you need to set the elasticsearch_discovery_enabled to true. Optionally, you can define a filter allowing to selectively include/exclude discovered nodes (details on how to specify node filters are found in the Elasticsearch cluster documentation) using the elasticsearch_discovery_filter setting, or by tuning the frequency of the node discovery using the elasticsearch_discovery_frequency configuration option. If your Elasticsearch cluster uses authentication, you need to specify the elasticsearch_discovery_default_user and elasticsearch_discovery_default_password settings. The username/password specified in these settings will be used for all nodes discovered in the cluster. If your cluster uses HTTPS, you also need to set the elasticsearch_discovery_default_scheme setting. It specifies the scheme used for discovered nodes and must be consistent across all nodes in the cluster.

Configuration of Elasticsearch Nodes

Control Access to Elasticsearch Ports

If you are not using Shield/X-Pack or Search Guard to authenticate access to your Elasticsearch nodes, make sure to restrict access to the Elasticsearch ports (default: 9200/tcp and 9300/tcp). Otherwise the data is readable by anyone who has access to the machine over a network.

Open File Limits

Because Elasticsearch has to keep a lot of files open simultaneously it requires a higher open file limit than most operating system defaults allow. Set it to at least 64000 open file descriptors.

Graylog will show a notification in the web interface when there is a node in the Elasticsearch cluster which has an open file limit that is too low.

Read about how to raise the open file limit in the corresponding 5.x, 6.x, and 7.x documentation pages.

Heap Size

We strongly recommended that you raise the standard size of heap memory allocated to Elasticsearch. For example, set the ES_HEAP_SIZE environment variable to 24g to allocate 24GB. We also recommend using around 50% of the available system memory for Elasticsearch (when running on a dedicated host) to leave enough space for the system caches that Elasticsearch uses to a great extent. But please take care that you don’t exceed 32 GB!

Merge Throttling

Elasticsearch throttles the merging of Lucene segments to allow extremely fast searches. This throttling however has default values that are very conservative and can lead to slow ingestion rates when used with Graylog. You can see the message journal growing without any real indication of CPU or memory stress on the Elasticsearch nodes. It usually shows up in Elasticsearch INFO log messages like this:

now throttling indexing When running on fast IO like SSDs or a SAN we recommend increasing the value of the indices.store.throttle.max_bytes_per_sec in your elasticsearch.yml to 150MB:

indices.store.throttle.max_bytes_per_sec: 150mbPlay around with this setting until you reach the best performance.

Tuning Elasticsearch

Graylog sets specific configurations for every index it manages. This tuning is sufficient for a lot of use cases and setups.

More detailed information about Elasticsearch configurations can be found in the official documentation.

Avoiding Split-Brain and Shard Shuffling

Split-Brain Events

Elasticsearch sacrifices consistency in order to ensure availability and partition tolerance. The reasoning behind this is that short periods of misbehavior are less problematic than short periods of unavailability. In other words, when Elasticsearch nodes within a cluster are unable to replicate changes to data, they will keep serving applications such as Graylog. When the nodes are able to replicate their data, they will attempt to converge the replicas and achieve eventual consistency .

Elasticsearch tackles the previous by electing leader nodes, which are in charge of database operations such as creating new indices, moving shards around the cluster nodes and so forth. Leader nodes coordinate their actions actively with others, ensuring that the data can be converged by non-leaders. The cluster nodes that are not leader nodes are not allowed to make changes that would break the cluster.

The previous mechanism can in some circumstances fail, causing a split-brain event. When an Elasticsearch cluster is split into two sections which work on the data independently, data consistency is lost. As a result nodes will respond differently to the same queries. This is considered a catastrophic event because the data originating from the two leaders can not be rejoined automatically and it takes quite a bit of manual work to remedy the situation.

Avoiding Split-Brain Events

Elasticsearch nodes take a simple majority vote over who is leader. If the majority agrees on one, then most likely the disconnected minority will give in and everything will be just fine. This mechanism requires that at least 3 nodes work together, merely one or two nodes can not form a majority.

The minimum amount of leader nodes required to elect a leader must be configured manually in elasticsearch.yml:

discovery.zen.minimum_master_nodes is ignored if running Elasticsearch versions 7 and up. See the blog on Breaking changes in 7.0 for more details.# At least NODES/2+1 on clusters with NODES > 2, where NODES is the number of master nodes in the cluster

discovery.zen.minimum_master_nodes: 2An example of what configuration values should typically be:

| Leader Nodes | minimum_master_nodes

|

Comments |

|---|---|---|

| 1 | 1 | |

| 2 | 1 | With 2 the other nodes going down, this would stop the cluster from working! |

| 3 | 2 | |

| 4 | 3 | |

| 5 | 3 | |

| 6 | 4 |

Some of the leader nodes may be dedicated leader nodes, meaning that they are only configured to handle lightweight operational (cluster management) responsibilities. They will not be able to handle or store any of the cluster’s data. The function of such nodes is similar to so called witness servers on other database products. Setting them up on dedicated witness sites will greatly reduce the risk of Elasticsearch cluster instability.

A dedicated leader node has the following configuration in elasticsearch.yml:

node.data: false

node.master: trueShard Shuffling

When the cluster status changes because of a node restart or availability issues, Elasticsearch will start automatically rebalancing the data in the cluster. The cluster works on making sure that the amount of shards and replicas will conform to the cluster configuration. This is a problem if status changes are just temporary. Moving shards and replicas around in the cluster takes up a considerable amount of resources and should be done only when necessary.

Avoiding Unnecessary Shuffling

Elasticsearch has a couple of configuration options which are designed to allow short times of unavailability before starting the recovery process with shard shuffling. There are 3 settings that may be configured in elasticsearch.yml:

# Recover only after the given number of nodes have joined the cluster. Can be seen as "minimum number of nodes to attempt recovery at all".

gateway.recover_after_nodes: 8

# Time to wait for additional nodes after recover_after_nodes is met.

gateway.recover_after_time: 5m

# Inform ElasticSearch about how many nodes form a full cluster. If this number is met, start up immediately.

gateway.expected_nodes: 10The configuration options should be set up so that only minimal node unavailability is tolerated. For example server restarts are common and should be managed. The logic is that if you lose large parts of your cluster, you should not tolerate the situation and you probably should start re-shuffling the shards and replicas.

Custom Index Mappings

Sometimes it’s better not to rely on Elasticsearch’s dynamic mapping. It's better to define a stricter schema for messages.

Graylog itself uses a default mapping which includes settings for the timestamp, message, full_message, and source fields of indexed messages:

$ curl -X GET 'http://localhost:9200/_template/graylog-internal?pretty'

{

"graylog-internal" : {

"order" : -1,

"index_patterns" : [

"graylog_*"

],

"settings" : {

"index" : {

"analysis" : {

"analyzer" : {

"analyzer_keyword" : {

"filter" : "lowercase",

"tokenizer" : "keyword"

}

}

}

}

},

"mappings" : {

"message" : {

"_source" : {

"enabled" : true

},

"dynamic_templates" : [

{

"internal_fields" : {

"mapping" : {

"type" : "keyword"

},

"match_mapping_type" : "string",

"match" : "gl2_*"

}

},

{

"store_generic" : {

"mapping" : {

"type" : "keyword"

},

"match_mapping_type" : "string"

}

}

],

"properties" : {

"gl2_processing_timestamp" : {

"format" : "yyyy-MM-dd HH:mm:ss.SSS",

"type" : "date"

},

"gl2_accounted_message_size" : {

"type" : "long"

},

"gl2_receive_timestamp" : {

"format" : "yyyy-MM-dd HH:mm:ss.SSS",

"type" : "date"

},

"full_message" : {

"fielddata" : false,

"analyzer" : "standard",

"type" : "text"

},

"streams" : {

"type" : "keyword"

},

"source" : {

"fielddata" : true,

"analyzer" : "analyzer_keyword",

"type" : "text"

},

"message" : {

"fielddata" : false,

"analyzer" : "standard",

"type" : "text"

},

"timestamp" : {

"format" : "yyyy-MM-dd HH:mm:ss.SSS",

"type" : "date"

}

}

}

},

"aliases" : { }

}In order to extend the default mapping of Elasticsearch and Graylog, you can create one or more custom index mappings and add them as index templates to Elasticsearch.

Let’s say we have a schema for our data like the following:

| Field Name | Field Type | Example |

|---|---|---|

http_method

|

keyword | GET |

http_response_code

|

long | 200 |

ingest_time

|

date | 2016-06-13T15:00:51.927Z |

took_ms

|

long | 56 |

This would translate to the following additional index mapping in Elasticsearch:

"mappings" : {

"message" : {

"properties" : {

"http_method" : {

"type" : "keyword"

},

"http_response_code" : {

"type" : "long"

},

"ingest_time" : {

"type" : "date",

"format": "strict_date_time"

},

"took_ms" : {

"type" : "long"

}

}

}

}Formatting the ingest_time field is described in Elasticsearch documentation under format mapping parameter. Also make sure to check Elasticsearch documentation for information on Field datatypes.

When Graylog creates a new index in Elasticsearch, it has to be added to an index template in order to apply additional index mapping. The Graylog default template (graylog-internal) has the lowest priority and Elasticsearch will merge it with the custom index template.

Creating a New Index Template

Save the following index template for the custom index mapping into a file named graylog-custom-mapping.json:

{

"template": "graylog_*",

"mappings" : {

"message" : {

"properties" : {

"http_method" : {

"type" : "keyword"

},

"http_response_code" : {

"type" : "long"

},

"ingest_time" : {

"type" : "date",

"format": "strict_date_time"

},

"took_ms" : {

"type" : "long"

}

}

}

}

} graylog-custom-mapping-7x.json.{

"template": "graylog_*",

"mappings": {

"properties": {

"http_method": {

"type": "keyword"

},

"http_response_code": {

"type": "long"

},

"ingest_time": {

"type": "date",

"format": "strict_date_time"

},

"took_ms": {

"type": "long"

}

}

}

}Finally, load the index mapping into Elasticsearch with the following command:

$ curl -X PUT -d @'graylog-custom-mapping.json' -H 'Content-Type: application/json' 'http://localhost:9200/_template/graylog-custom-mapping?pretty'

{

"acknowledged" : true

}Every Elasticsearch index created thereon, will have an index mapping consisting of the original graylog-internal index template and the new graylog-custom-mapping template:

$ curl -X GET 'http://localhost:9200/graylog_deflector/_mapping?pretty'

{

"graylog_3" : {

"mappings" : {

"message" : {

"dynamic_templates" : [

{

"internal_fields" : {

"match" : "gl2_*",

"match_mapping_type" : "string",

"mapping" : {

"type" : "keyword"

}

}

},

{

"store_generic" : {

"match_mapping_type" : "string",

"mapping" : {

"type" : "keyword"

}

}

}

],

"properties" : {

"full_message" : {

"type" : "text",

"analyzer" : "standard"

},

"http_method" : {

"type" : "keyword"

},

"http_response_code" : {

"type" : "long"

},

"ingest_time" : {

"type" : "date",

"format" : "strict_date_time"

},

"message" : {

"type" : "text",

"analyzer" : "standard"

},

"source" : {

"type" : "text",

"analyzer" : "analyzer_keyword",

"fielddata" : true

},

"streams" : {

"type" : "keyword"

},

"timestamp" : {

"type" : "date",

"format" : "yyyy-MM-dd HH:mm:ss.SSS"

},

"took_ms" : {

"type" : "long"

}

}

}

}

}

}Deleting Custom Index Templates

If you want to remove an existing index template from Elasticsearch, simply issue a DELETE request to Elasticsearch:

$ curl -X DELETE 'http://localhost:9200/_template/graylog-custom-mapping?pretty'

{

"acknowledged" : true

}After you’ve removed the index template, new indices will only have the original index mapping:

$ curl -X GET 'http://localhost:9200/graylog_deflector/_mapping?pretty'

{

"graylog_3" : {

"mappings" : {

"message" : {

"dynamic_templates" : [

{

"internal_fields" : {

"match" : "gl2_*",

"match_mapping_type" : "string",

"mapping" : {

"type" : "keyword"

}

}

},

{

"store_generic" : {

"match_mapping_type" : "string",

"mapping" : {

"type" : "keyword"

}

}

}

],

"properties" : {

"full_message" : {

"type" : "text",

"analyzer" : "standard"

},

"message" : {

"type" : "text",

"analyzer" : "standard"

},

"source" : {

"type" : "text",

"analyzer" : "analyzer_keyword",

"fielddata" : true

},

"streams" : {

"type" : "keyword"

},

"timestamp" : {

"type" : "date",

"format" : "yyyy-MM-dd HH:mm:ss.SSS"

}

}

}

}

}

}Additional information on Elasticsearch Index Templates can be found in the official Elasticsearch Template Documentation.

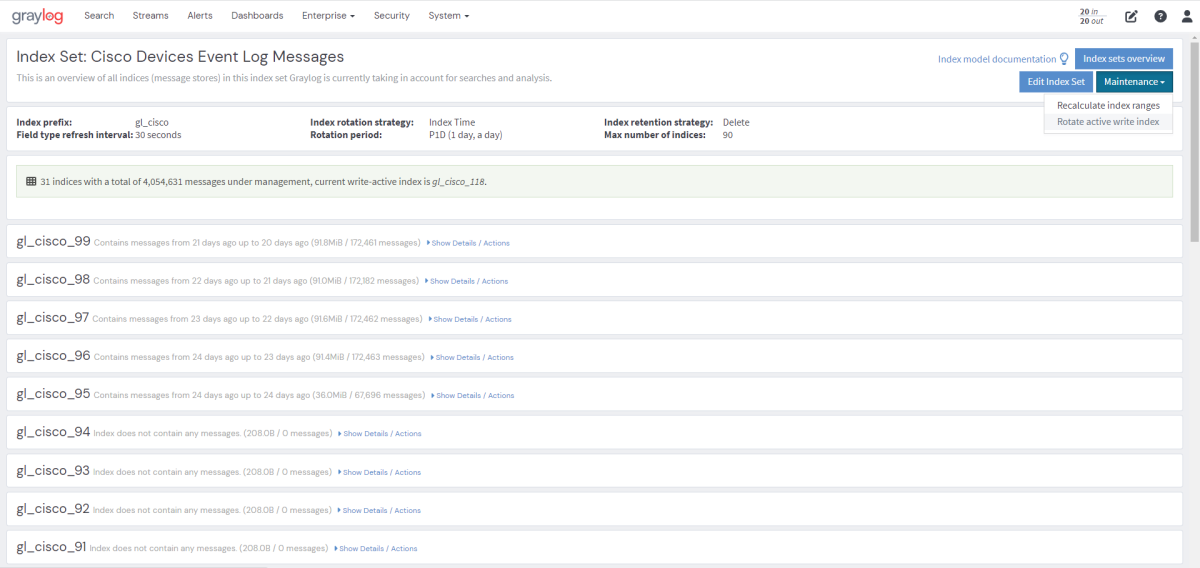

Rotate Indices Manually

Select the desired index set on the System / Indices page in the Graylog web interface by clicking on the name of the index set, then select “Rotate active write index” from the “Maintenance” drop-down menu.

Cluster Status Explained

Elasticsearch provides a classification for cluster health.

The cluster status applies to different levels:

-

Shard level - see status descriptions below

-

Index level - inherits the status of the worst shard status

-

Cluster level - inherits the status of the worst index status

That means that the Elasticsearch cluster status will turn red if a single index or shard has problems even though the rest of the indices/shards are okay.

Explanation of different status levels:

Red

The RED status indicates that some or all of the primary shards are not available.

In this state, no searches can be performed until all primary shards have been restored.

Yellow

The YELLOW status means that all of the primary shards are available but some or all shard replicas are not.

When the index configuration includes replications with a count that is equal or higher than the number of nodes, your cluster cannot become green. In most cases, this can be solved by adding another Elasticsearch node to the cluster or by reducing the replication factor of the indices.

Green

The cluster is fully operational. All primary and replica shards are available.